Memory Cloud

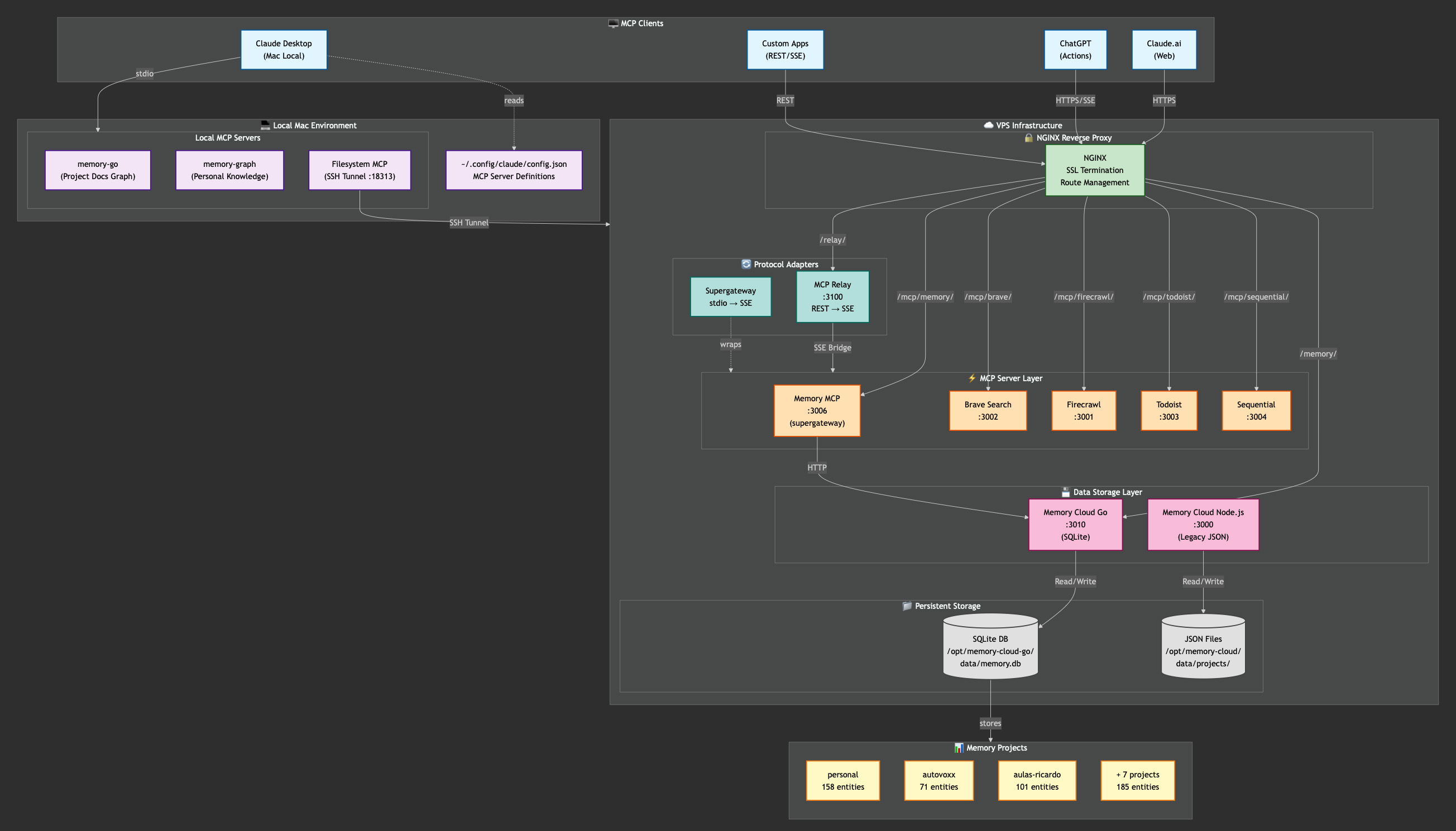

Distributed memory orchestration infrastructure for AI agents

Go | SQLite | MCP Protocol | NGINX | Docker | SSE | REST API


Overview

Memory Cloud is an infrastructure layer I developed to solve a fundamental problem when working with LLMs: context loss between sessions. It lets multiple clients (Claude, ChatGPT, custom applications) read and modify knowledge graphs consistently and securely, so memory persists across conversations.
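To make "knowledge graph" concrete, here is a minimal in-memory sketch of what one project's graph could look like. The entity/relation/observation shape and every name in it (`Graph`, `AddEntity`, `AddRelation`, `Related`) are hypothetical illustrations, not Memory Cloud's actual schema, which persists to SQLite.

```go
package main

import (
	"fmt"
	"sort"
)

// Entity is a node in the graph (hypothetical shape).
type Entity struct {
	Name         string
	Type         string
	Observations []string // free-text facts remembered about the entity
}

// Relation is a directed, typed edge between two entities.
type Relation struct {
	From, To, Type string
}

// Graph holds one project's memory in RAM; it is not safe for concurrent use.
// The real system stores graphs in SQLite instead.
type Graph struct {
	entities  map[string]*Entity
	relations []Relation
}

func NewGraph() *Graph {
	return &Graph{entities: make(map[string]*Entity)}
}

// AddEntity inserts a new entity, or appends observations to an existing one.
func (g *Graph) AddEntity(name, typ string, obs ...string) {
	if e, ok := g.entities[name]; ok {
		e.Observations = append(e.Observations, obs...)
		return
	}
	// Copy obs so the entity never aliases the caller's slice.
	g.entities[name] = &Entity{Name: name, Type: typ, Observations: append([]string(nil), obs...)}
}

// AddRelation links two existing entities and rejects edges to unknown nodes.
func (g *Graph) AddRelation(from, to, typ string) error {
	if _, ok := g.entities[from]; !ok {
		return fmt.Errorf("unknown entity %q", from)
	}
	if _, ok := g.entities[to]; !ok {
		return fmt.Errorf("unknown entity %q", to)
	}
	g.relations = append(g.relations, Relation{From: from, To: to, Type: typ})
	return nil
}

// Related returns, sorted, the entities reachable from name by one outgoing edge.
func (g *Graph) Related(name string) []string {
	var out []string
	for _, r := range g.relations {
		if r.From == name {
			out = append(out, r.To)
		}
	}
	sort.Strings(out)
	return out
}

func main() {
	g := NewGraph()
	g.AddEntity("memory-cloud", "project", "written in Go")
	g.AddEntity("sqlite", "database")
	g.AddEntity("nginx", "proxy")
	_ = g.AddRelation("memory-cloud", "sqlite", "stores_in")
	_ = g.AddRelation("memory-cloud", "nginx", "served_by")
	fmt.Println(g.Related("memory-cloud")) // [nginx sqlite]
}
```

Rejecting relations to unknown entities keeps the graph consistent when several clients write to it; a SQLite-backed version could get the same guarantee from foreign-key constraints.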

The Problem

AI agents lose context between sessions. Each conversation starts from scratch, forcing users to repeat information and discarding valuable history. Existing tools didn't offer the flexibility to orchestrate multiple isolated memories per project with central synchronization.

How It Works

The architecture keeps a separate memory for each project or context, orchestrated through a central API. Each project has its own isolated graph in SQLite, and the API coordinates access to those graphs and synchronizes them when needed. Clients connect via MCP (Model Context Protocol) or REST, with NGINX handling routing and SSL.
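The per-project isolation can be sketched as a central coordinator that lazily hands out one store per project. This is a hypothetical sketch: `Orchestrator`, `Store`, `Share`, the one-`.db`-file-per-project layout and the name-validation rule are assumptions for illustration, and the map inside `ProjectStore` stands in for a real SQLite connection (which would need a third-party `database/sql` driver).

```go
package main

import (
	"fmt"
	"path/filepath"
	"regexp"
	"sync"
)

// validProject restricts project names so they are safe to use as file names (assumed rule).
var validProject = regexp.MustCompile(`^[a-z0-9][a-z0-9_-]{0,62}$`)

// ProjectStore stands in for one project's SQLite database; a real version
// would hold a *sql.DB opened on Path instead of a map.
type ProjectStore struct {
	Path string
	mu   sync.RWMutex
	data map[string]string
}

func (s *ProjectStore) Put(key, value string) {
	s.mu.Lock()
	defer s.mu.Unlock()
	s.data[key] = value
}

func (s *ProjectStore) Get(key string) (string, bool) {
	s.mu.RLock()
	defer s.mu.RUnlock()
	v, ok := s.data[key]
	return v, ok
}

// Orchestrator is the central coordinator: one isolated store per project.
type Orchestrator struct {
	dataDir string
	mu      sync.Mutex
	stores  map[string]*ProjectStore
}

func NewOrchestrator(dataDir string) *Orchestrator {
	return &Orchestrator{dataDir: dataDir, stores: make(map[string]*ProjectStore)}
}

// Store returns the project's store, creating it on first use.
func (o *Orchestrator) Store(project string) (*ProjectStore, error) {
	if !validProject.MatchString(project) {
		return nil, fmt.Errorf("invalid project name %q", project)
	}
	o.mu.Lock()
	defer o.mu.Unlock()
	s, ok := o.stores[project]
	if !ok {
		s = &ProjectStore{
			Path: filepath.Join(o.dataDir, project+".db"), // one database file per project
			data: make(map[string]string),
		}
		o.stores[project] = s
	}
	return s, nil
}

// Share copies one key between projects; nothing crosses projects unless requested.
func (o *Orchestrator) Share(from, to, key string) error {
	src, err := o.Store(from)
	if err != nil {
		return err
	}
	dst, err := o.Store(to)
	if err != nil {
		return err
	}
	v, ok := src.Get(key)
	if !ok {
		return fmt.Errorf("key %q not found in project %q", key, from)
	}
	dst.Put(key, v)
	return nil
}

func main() {
	o := NewOrchestrator("/var/lib/memory-cloud") // hypothetical data directory
	a, _ := o.Store("alpha")
	b, _ := o.Store("beta")
	a.Put("deadline", "friday")
	_, seen := b.Get("deadline")
	fmt.Println(seen) // false: projects are isolated
	_ = o.Share("alpha", "beta", "deadline")
	v, _ := b.Get("deadline")
	fmt.Println(v) // friday
}
```

Validating the project name before it becomes a file path keeps a client from escaping the data directory (for example with `../`), and routing cross-project data only through an explicit call keeps isolation the default.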

Features

- Knowledge graphs isolated by project
- Go REST API with SQLite backend
- MCP server for native Claude integration
- Support for multiple simultaneous clients
- REST→SSE bridge for universal compatibility
- SSL/TLS via NGINX reverse proxy
- Durable persistence with automatic backups
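One possible reading of the REST→SSE bridge is a broker where clients publish over plain HTTP POST and subscribers receive the same messages as a Server-Sent Events stream. The sketch below uses only the standard library; the endpoint paths (`/publish`, `/events`), port 8080, the `memory` event name and the drop-on-full-buffer policy are assumptions, not the project's documented behavior.

```go
package main

import (
	"fmt"
	"io"
	"log"
	"net/http"
	"strings"
	"sync"
)

// formatSSE renders one SSE frame: one "data:" line per line of payload,
// terminated by a blank line.
func formatSSE(event, data string) string {
	var sb strings.Builder
	if event != "" {
		fmt.Fprintf(&sb, "event: %s\n", event)
	}
	for _, line := range strings.Split(data, "\n") {
		fmt.Fprintf(&sb, "data: %s\n", line)
	}
	sb.WriteString("\n")
	return sb.String()
}

// Broker fans messages published over REST out to every connected SSE client.
type Broker struct {
	mu      sync.Mutex
	clients map[chan string]struct{}
}

func NewBroker() *Broker { return &Broker{clients: make(map[chan string]struct{})} }

func (b *Broker) subscribe() chan string {
	ch := make(chan string, 16)
	b.mu.Lock()
	b.clients[ch] = struct{}{}
	b.mu.Unlock()
	return ch
}

func (b *Broker) unsubscribe(ch chan string) {
	b.mu.Lock()
	delete(b.clients, ch)
	b.mu.Unlock()
}

// Publish delivers msg to all clients, skipping any client whose buffer is full.
func (b *Broker) Publish(msg string) {
	b.mu.Lock()
	defer b.mu.Unlock()
	for ch := range b.clients {
		select {
		case ch <- msg:
		default: // slow client: drop rather than block every publisher
		}
	}
}

// HandlePublish is the REST side: a POSTed body is broadcast as one event.
func (b *Broker) HandlePublish(w http.ResponseWriter, r *http.Request) {
	if r.Method != http.MethodPost {
		http.Error(w, "method not allowed", http.StatusMethodNotAllowed)
		return
	}
	body, err := io.ReadAll(io.LimitReader(r.Body, 1<<20)) // cap bodies at 1 MiB
	if err != nil {
		http.Error(w, "bad body", http.StatusBadRequest)
		return
	}
	b.Publish(string(body))
	w.WriteHeader(http.StatusAccepted)
}

// HandleStream is the SSE side: it keeps the connection open and writes each message.
func (b *Broker) HandleStream(w http.ResponseWriter, r *http.Request) {
	flusher, ok := w.(http.Flusher)
	if !ok {
		http.Error(w, "streaming unsupported", http.StatusInternalServerError)
		return
	}
	w.Header().Set("Content-Type", "text/event-stream")
	w.Header().Set("Cache-Control", "no-cache")
	w.Header().Set("X-Accel-Buffering", "no") // ask NGINX not to buffer the stream
	ch := b.subscribe()
	defer b.unsubscribe(ch)
	for {
		select {
		case <-r.Context().Done(): // client disconnected
			return
		case msg := <-ch:
			io.WriteString(w, formatSSE("memory", msg))
			flusher.Flush()
		}
	}
}

func main() {
	b := NewBroker()
	http.HandleFunc("/publish", b.HandlePublish)
	http.HandleFunc("/events", b.HandleStream)
	log.Fatal(http.ListenAndServe(":8080", nil)) // plain HTTP; TLS is left to NGINX
}
```

Without `X-Accel-Buffering: no` (or `proxy_buffering off` in the NGINX config), the reverse proxy may hold events back instead of streaming them to clients as they arrive.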
